If you’ve been around the tech industry for more than two or three weeks, you’ve probably heard the word “lean” used in some context. It may have been in the wrong context, but you heard it nonetheless.

Many people have jumped on the bandwagon without ever really understanding it, while others have written it off as a hipster trend that will pass as quickly as flat design. I don’t know where the Lean movement will go, but I know that the core idea it’s built on is here to stay. I can sum up the Lean movement in one phrase:

You don’t know your end user half as well as you think you do.

Everything you think you know about your customer is great. But what if it’s wrong? What if your design firm’s recommendations are wrong? What if your market research is outdated the day you get back the report?

One of the beautiful things about the internet is the vast access to raw data. I was in Old Navy the other day and was hit by the true beauty of what the internet gives us access to. If I were the manager at Old Navy and wanted to test out a new design for my displays, I might have to go through countless rounds of design revisions and approvals in order to roll out the new designs. After I’ve rolled them out, though, how do I know if they’re working any better (or worse) than my old ones? Most of the people who come in won’t remember what the old ones looked like, and most won’t care if I ask them. That doesn’t mean the change has no impact, though.

If I really want to know which one works better, I need something more scientific. I need a steady flow of traffic, and I need to randomly show each customer one of the variations. Then I need to know how many of the people who saw the original sign purchased the item, and how many of the people who saw the new sign did. Sounds expensive and exhausting.

This is one of the reasons that I love working in the tech space. Something that used to take months (or years) of research, surveys, and customer interviews can now be tested in weeks (or days) with minimal effort.

Here at Metova, we’ve been working to optimize our site over the last couple of months. One of the favorite tools in our toolbelt is A/B testing. It’s essentially what I’ve described in the Old Navy example. The steps are quite simple:

- Come up with a small change that will potentially improve your conversions

- Present your original version to 50% of your traffic and your new version to the other 50%

- Run your test and monitor your conversions (the less traffic your site gets, the longer this step takes to produce reliable data)

- Implement the winner
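The steps above can be sketched in a few lines of Python. This is only an illustration, not the tooling we use on our own site: the `Experiment` class, its method names, and the `hero-cta` experiment name are all hypothetical. It assumes each visitor has a stable ID (a cookie value, for example) and hashes that ID so the same visitor always sees the same version.

```python
import hashlib
from collections import Counter


class Experiment:
    """Tracks visits and conversions for one A/B test (hypothetical helper)."""

    def __init__(self, name, variations=("control", "variant")):
        self.name = name
        self.variations = tuple(variations)
        self.visits = Counter()
        self.conversions = Counter()

    def assign(self, visitor_id):
        """Map a visitor to a variation; the same ID always gets the same one."""
        key = f"{self.name}:{visitor_id}".encode("utf-8")
        # Hashing spreads visitors roughly evenly across the variations.
        bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(self.variations)
        return self.variations[bucket]

    def record_visit(self, visitor_id):
        """Count a page view and return the variation to show."""
        variation = self.assign(visitor_id)
        self.visits[variation] += 1
        return variation

    def record_conversion(self, visitor_id):
        """Count a conversion (e.g. a button click) for the visitor's variation."""
        self.conversions[self.assign(visitor_id)] += 1

    def conversion_rate(self, variation):
        """Conversions per visit; 0.0 if the variation has no visits yet."""
        seen = self.visits[variation]
        return self.conversions[variation] / seen if seen else 0.0

    def winner(self):
        """Variation with the highest observed conversion rate."""
        return max(self.variations, key=self.conversion_rate)
```

With two variations this gives the 50/50 split described above. Passing three names, such as `("control", "smaller-button", "text-change")`, splits traffic three ways instead.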

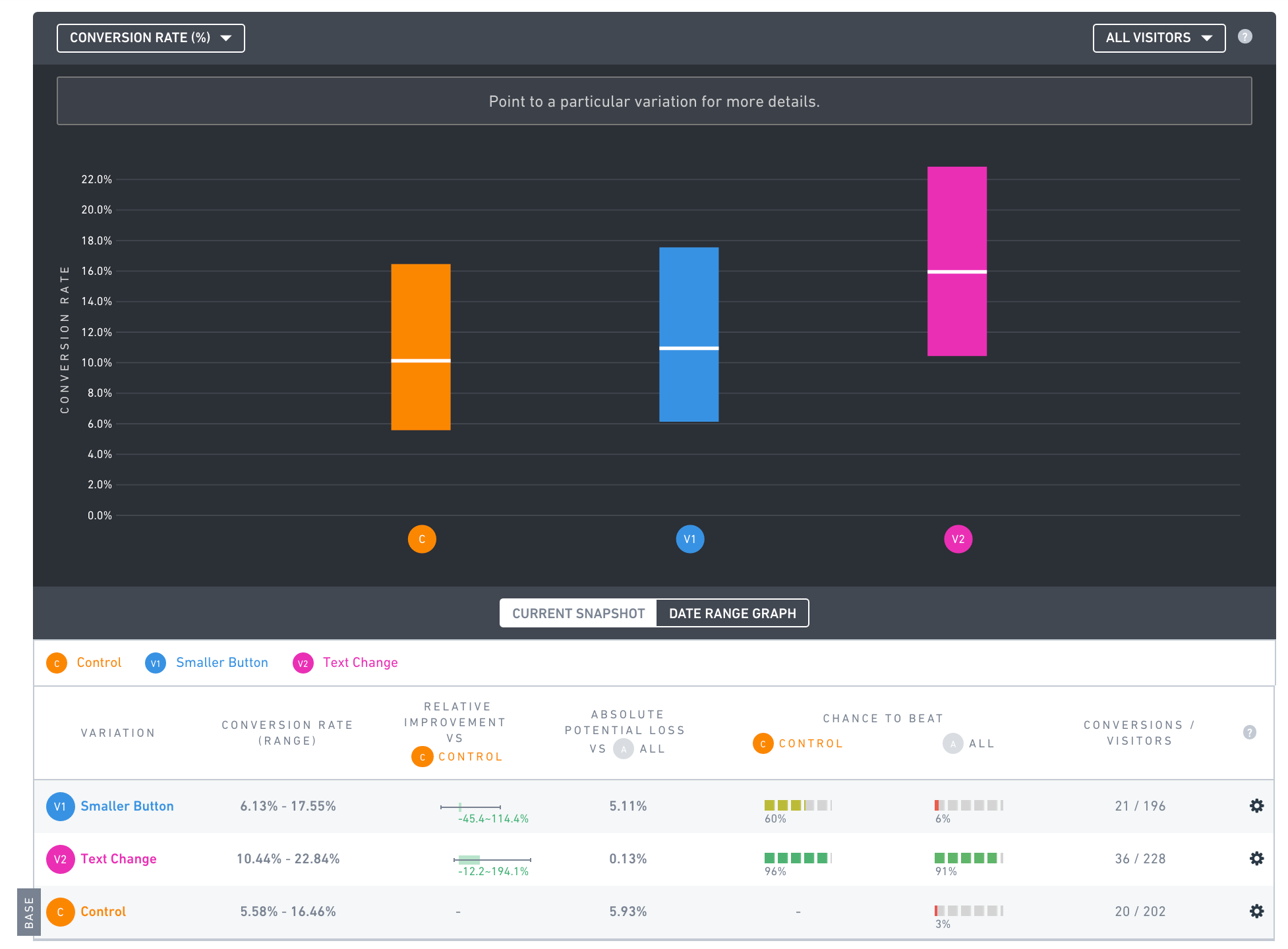

I’ll share with you a recent example from our home page. One of the lowest-hanging fruits in A/B testing is the infamous call-to-action button. Our hero call-to-action takes our visitors to the contact form to get in touch with us. I created a campaign with three variations (Control, Smaller Button, Text Change). After one week, here are the results:

The winning variation is a simple text change. By changing “Tell us about your project” to “Start my project today”, we saw an 80% increase in click-throughs per visitor.
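An increase like this is a relative lift: the new click-through rate minus the old one, divided by the old one. Before acting on a lift, it is worth checking that it is bigger than what chance alone would produce. The sketch below uses a standard two-proportion z-test for that. The visitor and click counts are invented for illustration and are not our actual campaign numbers, and the function names are hypothetical.

```python
import math


def relative_lift(control_rate, variant_rate):
    """Relative change of the variant's rate over the control's rate."""
    return (variant_rate - control_rate) / control_rate


def two_proportion_z_test(clicks_a, visitors_a, clicks_b, visitors_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = clicks_a / visitors_a
    rate_b = clicks_b / visitors_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (clicks_a + clicks_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up example: 50 clicks from 1,000 visitors vs. 90 clicks from 1,000.
lift = relative_lift(50 / 1000, 90 / 1000)
z, p_value = two_proportion_z_test(50, 1000, 90, 1000)
print(f"lift={lift:.0%}, z={z:.2f}, p={p_value:.4f}")
```

A small p-value (below 0.05 is a common, if arbitrary, cutoff) suggests the difference is unlikely to be noise. With only a handful of visitors, the same 80% lift could easily fail this check, which is why low-traffic tests need to run longer.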

If we weren’t tracking our changes and were just making decisions based on feeling or intuition, we would have had a hard time proving that the new version is actually better than the original. A/B testing is a safety net that you can’t afford not to have.

Many design firms will propose multimillion-dollar website redesigns that end up making little impact on your bottom line. In some cases, full redesigns actually perform far worse than the original. What we have started proposing (and doing on our own site) is a simple stepped approach: make small, iterative, data-backed changes to your site to get more of whatever it is you want (e.g. purchases, registrations, or form submissions).

If you want help making your site perform like a well-oiled machine, talk with us today and we’ll see if A/B testing makes sense for you!