Concurrency is a condition in a program where two or more tasks are defined independently, and each can execute independently of the other, even if the other is executing at the same time. In programming, concurrency can mean that several computations, data loads, or instructions are handled at roughly the same time, on a single-core or multi-core processor.

With a single-core processor, concurrency is achieved by context switching: time-multiplexed switching between threads that is quick enough to give the appearance of multi-tasking (to a user, single-core multi-tasking looks seamless because the switch is fast enough that both processes seem to run at the same time). On a multi-core processor, parallelism is achieved by allowing each thread to run on its own core (each thread has its own allocated space to run the process). Both of these techniques follow the notion of concurrency.

In iOS development, we leverage multi-threading through the tools Apple provides, so that our application can run tasks on queues with the goal of improving performance. Concurrency is difficult, so it is important to analyze exactly what needs to be optimized before adding it.

Identify whether you are trying to increase throughput, improve user responsiveness, or reduce latency to make your application better. There are third-party libraries, such as AFNetworking, that build on the asynchronous use of concurrency in NSURLConnection, as well as other tools that simplify the process.

Queue Types

Defining the type of queue is important in achieving the results you want, and will help prioritize the way your blocks of code are executed.

Synchronous: The current thread is blocked until the task has finished executing.

Asynchronous: The call returns immediately and the task runs later on the queue it was submitted to (typically on a background thread), keeping the current thread free for other work.

Serial: Serial queues execute blocks one at a time, in the order in which they were entered (FIFO – First In First Out).

You can create more than one serial queue. If you had four serial queues, all four could be running a task at the same time, each asynchronously with respect to the others. The advantage is that you control the order (FIFO) in which the blocks of code execute on each serial queue, and access is thread-safe within each serial queue.

Concurrent: Concurrent queues can execute several blocks at the same time, allowing work to run in parallel. Blocks are still started in FIFO order, and the system decides how many run at once. The disadvantage is that you do not have control over which tasks finish first.
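As a minimal sketch of the serial case described above, the following uses the Swift 3+ spelling of the GCD API (`DispatchQueue`) rather than the older `dispatch_*` functions. The queue label and the `OrderLog` helper are made up for illustration.

```swift
import Foundation

// Hypothetical helper: a box for results. It is marked @unchecked Sendable
// because every access below goes through the same serial queue.
final class OrderLog: @unchecked Sendable {
    var values: [Int] = []
}

func runOnSerialQueue() -> [Int] {
    // A DispatchQueue is serial unless created with the .concurrent attribute.
    let serialQueue = DispatchQueue(label: "com.example.serial")
    let log = OrderLog()

    for i in 1...5 {
        // async returns immediately; the block runs later on the queue.
        serialQueue.async { log.values.append(i) }
    }

    // sync blocks the caller until every block queued before it has run.
    return serialQueue.sync { log.values }
}

print(runOnSerialQueue()) // [1, 2, 3, 4, 5]
```

Because the queue is serial, the blocks run in the order they were submitted, even though each one was submitted asynchronously.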

NSOperation & NSOperationQueue

NSOperation and NSOperationQueue are a high-level abstraction built on top of GCD that provides more control over concurrency. The ability to cancel an operation, and to add priorities and dependencies to your operations, gives you control over how your application functions.

Types of NSOperation:

- NSOperation: Inherits from NSObject, allowing us to add our own properties and methods if we want to subclass it. It is important to make sure the properties and methods are thread safe as well.

- addDependency: Allows you to make one NSOperation dependent on another, as you will see in an example below.

- removeDependency: Allows the removal of a dependency operation.

- NSInvocationOperation: A non-concurrent operation that invokes a selector on a single specified object.

- NSBlockOperation: Manages the concurrent execution of blocks. This allows you to execute multiple blocks at once without creating separate operations for each one, and the operation is finished when all blocks have completed executing.
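The NSBlockOperation behavior described in the list above can be sketched as follows, using the Swift 3+ name `BlockOperation`. The `Counter` helper is hypothetical and exists only so the blocks have shared state to update safely.

```swift
import Foundation

// Hypothetical helper: a lock-protected counter, since the blocks of a
// BlockOperation may run concurrently with each other.
final class Counter: @unchecked Sendable {
    private let lock = NSLock()
    private var count = 0

    func increment() {
        lock.lock()
        count += 1
        lock.unlock()
    }

    var value: Int {
        lock.lock()
        defer { lock.unlock() }
        return count
    }
}

func runBlockOperation() -> Int {
    let counter = Counter()

    // One operation that owns three blocks.
    let operation = BlockOperation { counter.increment() }
    operation.addExecutionBlock { counter.increment() }
    operation.addExecutionBlock { counter.increment() }

    // The operation only counts as finished once all three blocks are done.
    let queue = OperationQueue()
    queue.addOperations([operation], waitUntilFinished: true)
    return counter.value
}

print(runBlockOperation()) // 3
```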

NSOperation

The NSOperation class exposes state properties that give you information about your operation. Each is a boolean, and you can use KVO notifications on their key paths to know, for example, when an operation is ready to be executed.

- Ready: Returns true when ready to execute, or false if there are still dependencies needed.

- Executing: Returns true if the current operation is on a task, and false if it isn’t.

- Finished: Returns true if the task has executed successfully or if it has been cancelled. An NSOperationQueue does not dequeue an operation until finished changes to true. It is important to implement dependencies and finish-blocks correctly to avoid deadlock.

NSOperation Cancellation

Allows an operation to be cancelled while it is queued or executing. It is also possible to cancel every operation in an NSOperationQueue at once with cancelAllOperations, which marks all operations in the queue as cancelled.

    override func main() {
        for pair in inputArray {
            // Stop early if the operation has been cancelled
            if cancelled { return }
            outputArray.append(pair)
        }
    }

Cancellation is cooperative: to cancel an execution effectively, the main method of the operation must itself check for cancellation. You can additionally add a progress function to check how much data has been processed before cancellation.
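A self-contained sketch of the same idea, written with the Swift 3+ names (`Operation`, `isCancelled`). `CopyOperation` and `run(cancelFirst:)` are hypothetical names used only for this illustration.

```swift
import Foundation

// Hypothetical operation that copies its input item by item and checks
// for cancellation before each step.
final class CopyOperation: Operation {
    let input: [Int]
    private(set) var output: [Int] = []

    init(input: [Int]) {
        self.input = input
        super.init()
    }

    override func main() {
        for item in input {
            // Cooperative cancellation: bail out as soon as we are told to.
            if isCancelled { return }
            output.append(item)
        }
    }
}

func run(cancelFirst: Bool) -> [Int] {
    let operation = CopyOperation(input: [1, 2, 3])
    if cancelFirst {
        operation.cancel() // cancelled before it ever starts
    }

    let queue = OperationQueue()
    queue.addOperations([operation], waitUntilFinished: true)
    return operation.output
}

print(run(cancelFirst: false)) // [1, 2, 3]
print(run(cancelFirst: true))  // []
```

Cancelling before the operation starts keeps the result deterministic for the example. Cancelling a running operation stops it at whichever check it reaches next.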

NSOperationQueue

Regulates the way your operations are handled in a concurrent manner. Operations with a higher priority will be executed with precedence over those with a lower priority. An enumeration is used to state the priority cases.

    public enum NSOperationQueuePriority : Int {
        case VeryLow
        case Low
        case Normal
        case High
        case VeryHigh
    }

NSBlockOperation

    NSOperationQueue *queue = [[NSOperationQueue alloc] init];

    NSBlockOperation *op1 = [NSBlockOperation blockOperationWithBlock:^{
        NSLog(@"op 1");
    }];
    NSBlockOperation *op2 = [NSBlockOperation blockOperationWithBlock:^{
        NSLog(@"op 2");
    }];
    NSBlockOperation *op3 = [NSBlockOperation blockOperationWithBlock:^{
        NSLog(@"op 3");
    }];

    // op3 is executed last after op2, and op2 after op1
    [op2 addDependency:op1];
    [op3 addDependency:op2];

    [queue addOperation:op1];
    [queue addOperation:op2];
    [[NSOperationQueue mainQueue] addOperation:op3];

Result: op1, op2, op3, always in that order. You will know the operation is complete once op3 finishes.

Key-Value Coding (KVC) and Key-Value Observing (KVO)

NSOperation is both KVC- and KVO-compliant. These features alert other areas of your application to the state of operations. NSOperation relies on these notification systems to communicate the current state of operations.

NSOperation Life Cycle

- isReady: read-only – operation is ready

- isExecuting: read-only – operation is executing

- isFinished: read-only – operation is finished

- isCancelled: read-only – operation is cancelled

Operation Properties

- queuePriority: read-and-write

- completionBlock: read-and-write

- isAsynchronous: read-only

- dependencies: read-only

Example of KVO:

    var state = State.Ready {
        willSet {
            // Announce the change for both the new and the current state
            willChangeValueForKey(newValue.keyPath)
            willChangeValueForKey(state.keyPath)
        }
        didSet {
            // Confirm the change for both the old and the current state
            didChangeValueForKey(oldValue.keyPath)
            didChangeValueForKey(state.keyPath)
        }
    }

In the example above, “willChangeValueForKey” and “didChangeValueForKey” are each called twice. Both calls are required because two states always change when an operation transitions. For example, when going from ready to executing, the ready state becomes false and the executing state becomes true.

Dependencies

With an application, it is important to understand the architecture you want to use when any event is handled. With dependencies we can add more control over how things are executed, when they are executed, and when they are not executed.

For example, the picture below describes the dependencies required to “Save Favorite”. We want to make sure that a user is logged in, and that the favorite is saved to the user’s information and their CloudKit account. We can make the “Save Favorite” operation dependent on User Info, and User Info dependent on being logged in.

    let userOperation: NSOperation = ...
    let favoriteOperation: NSOperation = ...

    // favoriteOperation will not start until userOperation has finished
    favoriteOperation.addDependency(userOperation)

    let operationQueue = NSOperationQueue.mainQueue()
    operationQueue.addOperations([userOperation, favoriteOperation], waitUntilFinished: false)

An operation will not be started until all of its dependencies have finished, that is, until each one reports its finished state as true. You cannot “Save Favorite” until “User Info” has finished.

It is important to make sure you do not create a dependency cycle or deadlock by having two operations be dependent on each other finishing.
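The “logged in, then User Info, then Save Favorite” chain can be sketched as a runnable example. This uses the Swift 3+ names (`BlockOperation`, `OperationQueue`). The `StepLog` helper and the operation bodies are placeholders for illustration, not the real work such operations would do.

```swift
import Foundation

// Hypothetical helper: a lock-protected log of which step ran when.
final class StepLog: @unchecked Sendable {
    private let lock = NSLock()
    private var steps: [String] = []

    func add(_ step: String) {
        lock.lock()
        steps.append(step)
        lock.unlock()
    }

    var all: [String] {
        lock.lock()
        defer { lock.unlock() }
        return steps
    }
}

func runSaveFavorite() -> [String] {
    let log = StepLog()
    let login = BlockOperation { log.add("login") }
    let userInfo = BlockOperation { log.add("userInfo") }
    let saveFavorite = BlockOperation { log.add("saveFavorite") }

    // Each operation waits for the one it depends on.
    userInfo.addDependency(login)
    saveFavorite.addDependency(userInfo)

    // Added in reverse order on purpose: dependencies, not insertion
    // order, decide when each operation may start.
    let queue = OperationQueue()
    queue.addOperations([saveFavorite, userInfo, login], waitUntilFinished: true)
    return log.all
}

print(runSaveFavorite()) // ["login", "userInfo", "saveFavorite"]
```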

Grand Central Dispatch (GCD)

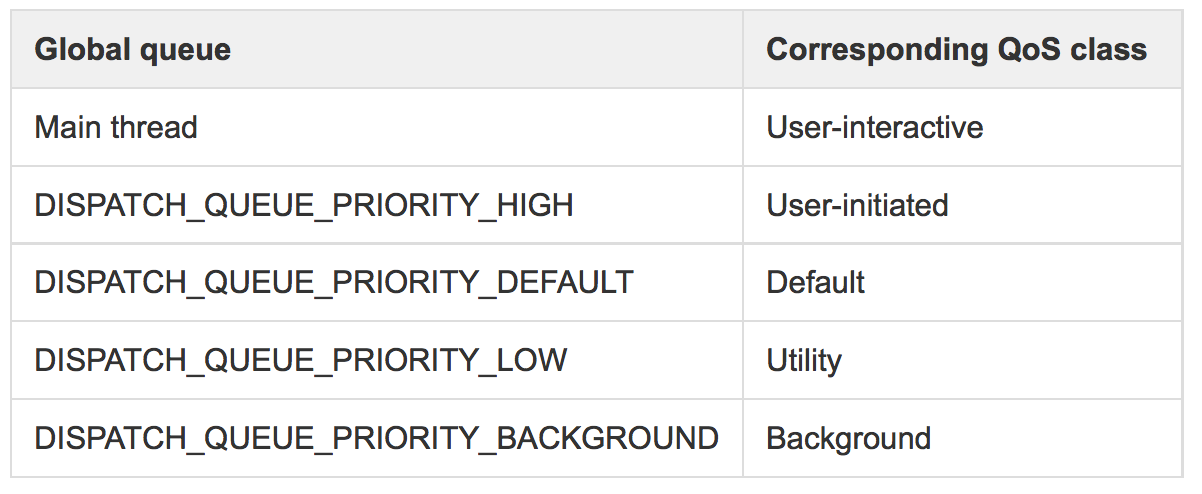

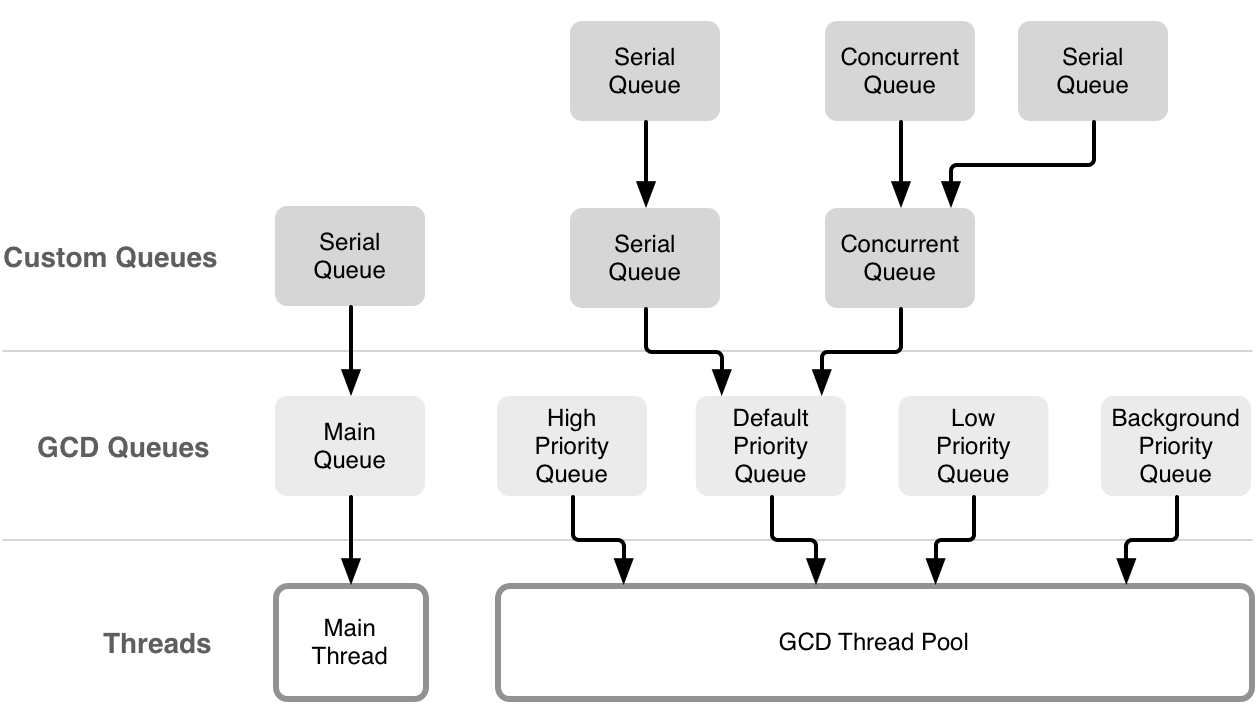

GCD is Apple’s solution for simplifying multi-threading. GCD is a low-level C library. It uses the main thread and 4 priority queues to handle multi-threading, with the 4 queues organized by priority level. It is important to note that the 4 priority types listed (High, Default, Low, Background) have now been replaced with Quality of Service. Quality of Service can be used for both GCD and NSOperations.

Dispatch Queues

A dispatch queue is used to handle blocks of code that we want to send to a resource. Below are the 5 global queues, and their corresponding QoS class, which provides more specific definitions of their functionality.

You can additionally create your own custom queues to handle additional dispatches that you may want to use. Quality of Service also helps determine the priority used on queues; you can read about that further below.

GCD uses FIFO (First In First Out) to handle all of its queues; this formatting means that the first task entered into the queue will also be the first task to start. Your goal is to ensure the right dispatch queue is used with the right dispatch function.

Image used with permission from objc.io

Dispatch Types

Return the queue on which the current block is executing on:

Additional types:

- dispatch_async: Function immediately returns, task will execute in the background and complete sometime in the future.

- dispatch_sync: Executes synchronously. The function won’t return until the task is completed, blocking the current thread in the meantime. This doesn’t mean the task is handled immediately: it is added to the queue, and the dispatcher chooses when it executes. Use with caution, as you can deadlock by using this function.

- dispatch_once: Executes a block once, and only once for the entire lifespan of the application. It is used to initialize singletons in the application. If this is called from multiple threads it is a synchronous process in which it waits until the block has completed.

The full list can be found here.
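One note on `dispatch_once`: it is not available from Swift 3 onward, where a `static let` gives the same run-once guarantee for singletons. The sketch below assumes a hypothetical `Settings` singleton and counts how many times its initializer runs when eight threads ask for it at once.

```swift
import Foundation

// Hypothetical helper: a lock-protected counter.
final class InitCounter: @unchecked Sendable {
    private let lock = NSLock()
    private var count = 0

    func increment() {
        lock.lock()
        count += 1
        lock.unlock()
    }

    var value: Int {
        lock.lock()
        defer { lock.unlock() }
        return count
    }
}

// Hypothetical singleton. Swift initializes a static let lazily and exactly
// once, even when several threads reach it at the same time.
final class Settings: Sendable {
    static let initCount = InitCounter()
    static let shared = Settings()

    private init() {
        Settings.initCount.increment()
    }
}

func touchSingletonConcurrently() -> Int {
    // Run the closure 8 times, in parallel where the hardware allows.
    DispatchQueue.concurrentPerform(iterations: 8) { _ in
        _ = Settings.shared
    }
    return Settings.initCount.value
}

print(touchSingletonConcurrently()) // 1
```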

Dispatch Groups

Dispatch groups allow us to group operations together, in order to track when a group of them have finished, and manage them in a cohesive unit.

When you dispatch a task, you provide a queue and a group. The group would have the same effect as the coloring scheme above. You can also check when a group finishes, displayed with the dotted lines above.

    // workerQueue is assumed here to be a global background queue
    let workerQueue = dispatch_get_global_queue(QOS_CLASS_UTILITY, 0)

    // Creates a group that can be referenced in later calls
    let group1 = dispatch_group_create()

    // Submit an async task to the queue as part of the group
    dispatch_group_async(group1, workerQueue) {
        print("Task 1")
    }

    // Notify on the main queue once every task in the group has completed
    dispatch_group_notify(group1, dispatch_get_main_queue()) {
        print("Task 1 has completed")
    }

Above we created a group with “dispatch_group_create”, then used “dispatch_group_async” to submit a task to the group. We use “dispatch_group_notify” to let us know when the group’s tasks have finished.
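For comparison, here is a hedged sketch of the same pattern with the Swift 3+ API (`DispatchGroup`). The queue label and the `Results` helper are made up. The example blocks with `wait()` so that it can return a value; `group.notify(queue:)` is the non-blocking counterpart of `dispatch_group_notify`.

```swift
import Foundation

// Hypothetical helper: a lock-protected list of results.
final class Results: @unchecked Sendable {
    private let lock = NSLock()
    private var values: [Int] = []

    func add(_ value: Int) {
        lock.lock()
        values.append(value)
        lock.unlock()
    }

    var sorted: [Int] {
        lock.lock()
        defer { lock.unlock() }
        return values.sorted()
    }
}

func runGroup() -> [Int] {
    let group = DispatchGroup()
    let workerQueue = DispatchQueue(label: "com.example.worker", attributes: .concurrent)
    let results = Results()

    for i in 1...3 {
        // Submit each task to the queue as a member of the group.
        workerQueue.async(group: group) {
            results.add(i * i)
        }
    }

    // Block until every task in the group has finished.
    group.wait()
    return results.sorted
}

print(runGroup()) // [1, 4, 9]
```

The results are sorted before returning because tasks on a concurrent queue may finish in any order.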

How to get the name of the current queue:

    func currentQueueName() -> String? {
        let label = dispatch_queue_get_label(DISPATCH_CURRENT_QUEUE_LABEL)
        return String(CString: label, encoding: NSUTF8StringEncoding)
    }

    let currentQueue = currentQueueName()
    print(currentQueue)

No exceptions in GCD

Because GCD doesn’t catch exceptions, it is our responsibility to handle errors inside our blocks, for example with do/catch.

    // `block` is assumed to be a throwing closure defined elsewhere
    dispatch_async(dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0)) {
        do {
            try block()
        } catch {
            print("Error during async execution")
            print(error)
        }
    }

An example of utilizing a dispatch request would be when calling an asynchronous networking request in order to handle data being loaded in the background, while allowing the Main Thread or UI of the application to be unaffected.

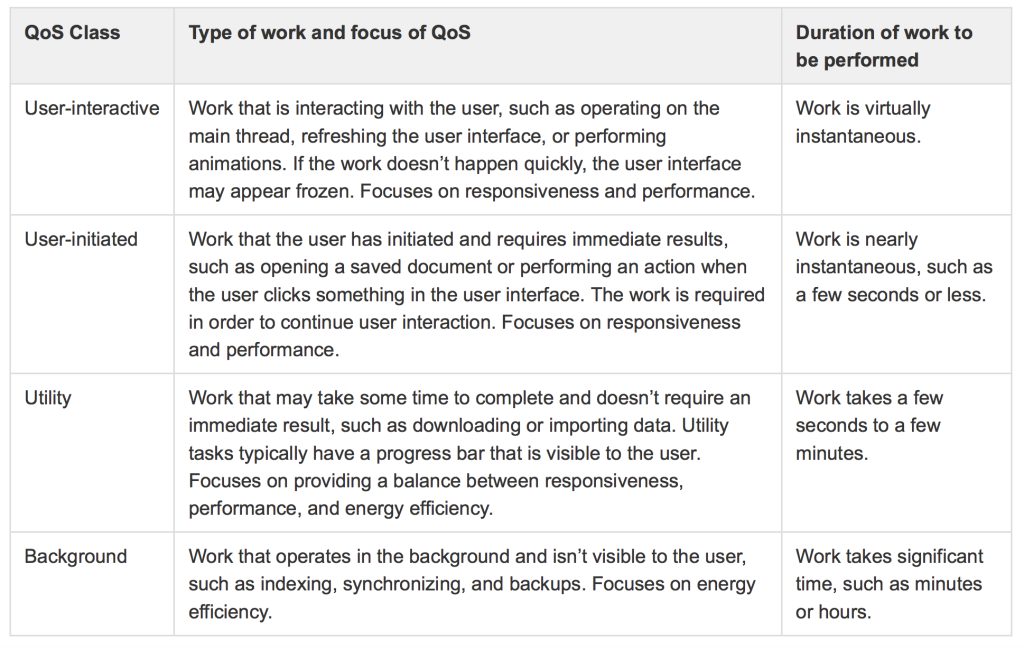

Quality of Service

Quality of Service is used for both NSOperations and GCD Queues. QoS establishes a system-wide priority on which resources (CPU, Network, Disk) to allocate to what areas. A higher quality of service means more resources will be used.

Important: QoS is not a replacement for dependencies. You can’t enforce a strict order through QoS alone; you can only tell the system how it should allocate resources.

    @available(iOS 8.0, OSX 10.10, *)
    public enum NSQualityOfService : Int {
        case UserInteractive
        case UserInitiated
        case Utility
        case Background
        case Default
    }

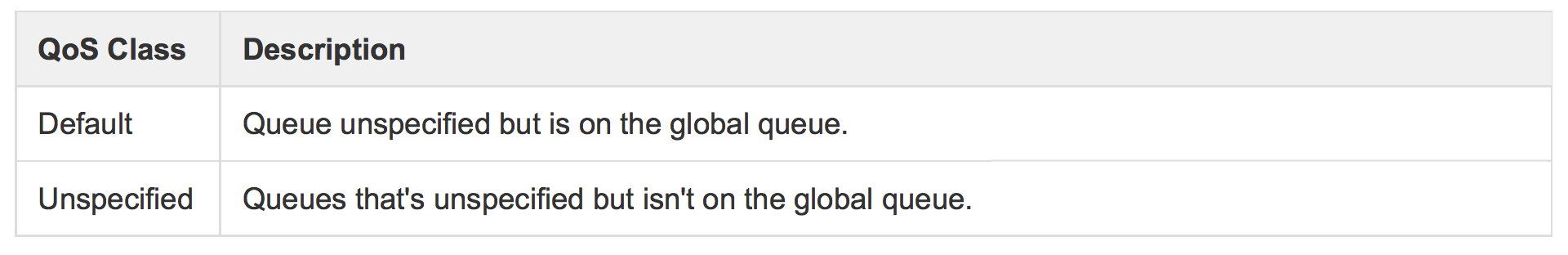

From Apple:

There are also two classes that are less frequent but are good to be aware of, they occur when you don’t specify the QoS class.

NSOperation Example of QoS:

Here the operation is being set to a QoS of Utility, which means that we don’t require an immediate result, and the task will be done in the background.

    NSOperation *operation = ...;
    operation.qualityOfService = NSQualityOfServiceUtility;

GCD Example of QoS:

Here our dispatch queue is set to a QoS of User Interactive (UI). This class is for work that draws on the interface and whose result the user expects immediately. These tasks could be animating a login, or adding a comment on social media.

    // DISPATCH_QUEUE_SERIAL stands in for the original, undefined `attr`
    dispatch_queue_attr_t qos_attr = dispatch_queue_attr_make_with_qos_class(DISPATCH_QUEUE_SERIAL, QOS_CLASS_USER_INTERACTIVE, 0);
    dispatch_queue_t queue = dispatch_queue_create("com.YourApp.YourQueue", qos_attr);
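The two QoS examples above might look like this with the Swift 3+ API. This is a sketch only: the function names are hypothetical, and the work inside each closure is a placeholder.

```swift
import Foundation

// Operation example: QoS of Utility, for work that does not need an
// immediate result.
func utilityOperationQoS() -> QualityOfService {
    let operation = BlockOperation { /* placeholder for background work */ }
    operation.qualityOfService = .utility
    return operation.qualityOfService
}

// GCD example: a custom queue created with the User Interactive QoS class.
func runOnUserInteractiveQueue() -> Int {
    let queue = DispatchQueue(label: "com.YourApp.YourQueue", qos: .userInteractive)
    // sync runs the closure on the queue and hands back its result.
    return queue.sync { 21 * 2 }
}

print(utilityOperationQoS() == .utility) // true
print(runOnUserInteractiveQueue())       // 42
```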

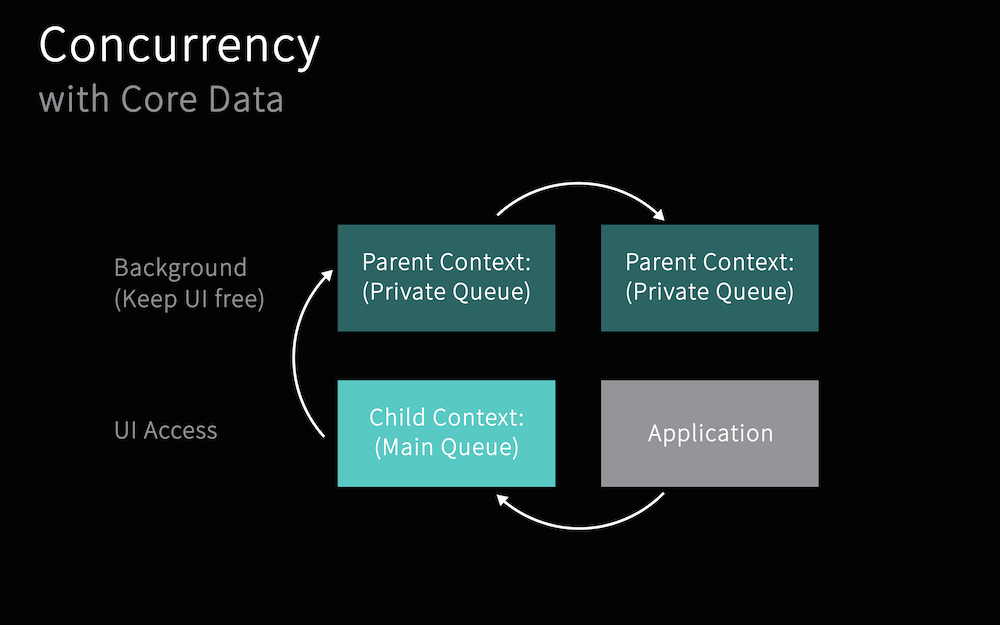

Core Data and Concurrency

For Core Data, concurrency means the ability to work with data on more than one queue at a time. Core Data expects each context to be used on a single thread (or queue) to be safe. It is also important to note that UIKit is not thread-safe: UIKit operations should be performed on the main thread.

Core Data Basics:

- NSManagedObject: is a generic class that implements all the basic behavior required of a Core Data model object. These will be connected to the persistent store, via the main or private queue.

- NSManagedObjectContext (MOC): This is a single space or object space (scratch-pad) in the application used to manage a collection of Managed Objects.

- NSPersistentStoreCoordinator: This is used to save object graphs created by NSManagedObjectContext to a persistent store, and can retrieve model information. Coordinators serialize operations, so in order to have concurrency we must use multiple coordinators.

- NSMainQueueConcurrencyType: This is used for contexts linked to controllers and UI objects that are to be used only on the main thread.

- NSPrivateQueueConcurrencyType: This manages a private queue. Here background processing, importing/exporting data should be taken care of.

Concurrency in Core Data:

To achieve concurrency in Core Data, we set up a parent/child relationship between a private-queue context and a main-queue context. The persistent store is connected to a Managed Object Context on the private queue (the “Parent”), and the actual work happens on a Managed Object Context on the main queue (the “Child”). Saving on the main-queue context pushes the changes up to the private-queue parent, which keeps the application running quickly. When we want to write the data through the Persistent Store Coordinator, we call save on the private-queue context. This keeps the “heavy save” asynchronous and off the main queue, so it does not block the user interface.

NSManagedObject

Although this class isn’t thread-safe, we can utilize its objectID in order to pass an instance of the class. NSManagedObjectID is thread safe, and it contains the information we need.

    // Object ID of the managed object
    NSManagedObjectID *objectID = [managedObject objectID];

What to Watch for

Critical Section

A critical section is a block of code that must not be executed concurrently, because it changes a shared resource (such as a variable) that can be corrupted if it is accessed from two different places at the same time.

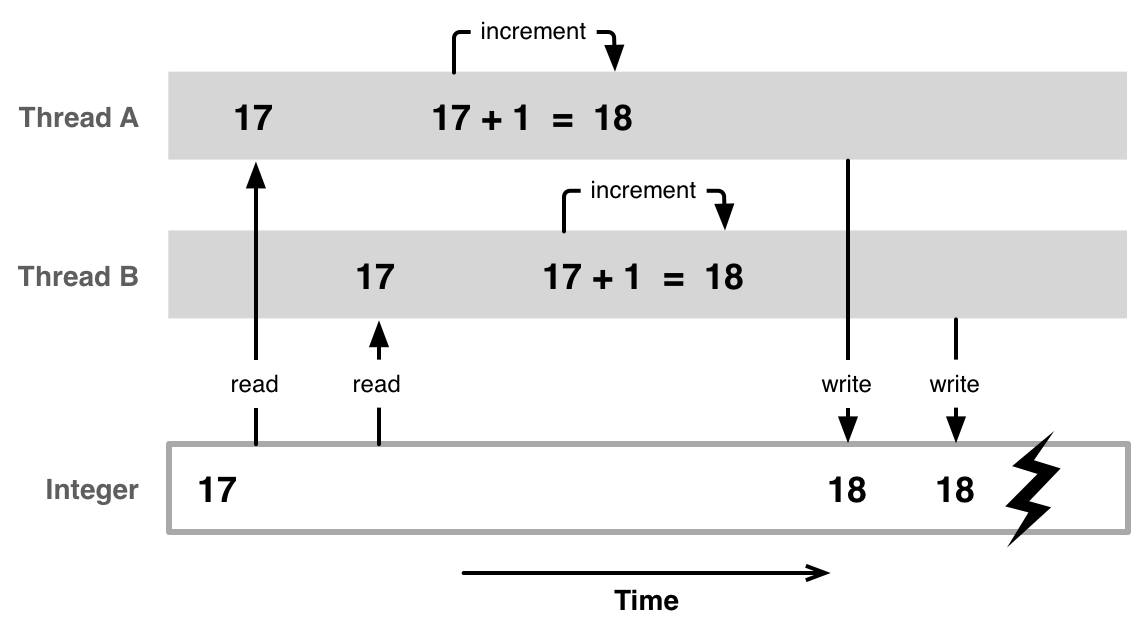

Race Condition

A race condition is the result of entering a “Critical Section” of code from two threads at the same time. Below, two threads both read the value 17 and each performs its operation on it, so the last one to finish overwrites the other’s work. The final result should be 19, but because the threads raced, only the last change was saved and 18 was incorrectly recorded as the result. This can go unnoticed, so it is important to be aware of.

Image used with permission from objc.io

Deadlock

A deadlock occurs when two threads get stuck waiting for each other to finish. In the example below, Thread A and Thread B are requesting each other’s resources, and each depends on the other’s completion, so neither thread is able to continue. Routing access to the shared resources through a single serial queue can help avoid this kind of deadlock, because the tasks are executed one at a time, in order.

Thread Safety

Certain types of code are thread safe. A general rule is that if data is immutable (unchangeable), it is thread safe, because the value cannot change while being accessed concurrently by more than one block of code. Data that is mutable (changeable) is not thread safe and is best handled through serial queues, so that only one block of code accesses the variable at a time.

A list of Thread-Safe Classes and Functions (and Thread-Unsafe) can be found in Apple’s Documentation.
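As a minimal sketch of guarding mutable state with a serial queue, the hypothetical `SafeCounter` below funnels every read and write through one queue, so the lost-update race described earlier cannot occur. It uses the Swift 3+ `DispatchQueue` API, and the queue label is made up.

```swift
import Foundation

// Hypothetical counter whose mutable state is only ever touched on its
// own private serial queue.
final class SafeCounter: @unchecked Sendable {
    private let queue = DispatchQueue(label: "com.example.counter")
    private var value = 0

    func increment() {
        // Only one increment can be inside this block at a time.
        queue.sync { value += 1 }
    }

    var current: Int {
        queue.sync { value }
    }
}

func countConcurrently() -> Int {
    let counter = SafeCounter()
    // 1000 increments issued from multiple threads in parallel.
    DispatchQueue.concurrentPerform(iterations: 1000) { _ in
        counter.increment()
    }
    return counter.current
}

print(countConcurrently()) // 1000
```

Without the queue, concurrent `value += 1` calls could overwrite each other and the total could come up short.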

Priority Inversion

Priority inversion occurs when a task with a lower priority holds a lock on a shared resource that a higher-priority task requires.

A real life example of priority inversion was with the Mars Pathfinder. Priority inversion caused the software of the rover to continuously reset, losing the data of each day that it was collecting. Utilizing priority inheritance, they were able to resolve the problem.

An example of priority inversion is set below:

- A low-priority task accesses the shared resource and locks it to avoid a race condition.

- A medium-priority task arrives and takes precedence over the low-priority one, suspending it. However, the shared resource is still locked by the low-priority task.

- A high-priority task preempts the medium-priority task and tries to access the resource, but it is still locked by the low-priority task.

- Control then drops down to the medium-priority task, which continues running. This is priority inversion, because the medium-priority task is effectively running ahead of the high-priority task. We see this in the middle of the graph, where the orange is highlighted while the red is faded out.

Potential Solutions

- Priority inheritance: The basic idea of the priority inheritance protocol is that when a job blocks one or more high-priority jobs, it ignores its original priority assignment and executes its critical section at an elevated priority level. After executing its critical section and releasing its locks, the process returns to its original priority level.

- Dependencies and serial queuing: These help mitigate the risk of priority inversion while adding clarity to your NSOperations, by allowing single-file execution of your threads on the shared resource, so that it is not locked against other tasks.

Context Switching

Occurs when an operation has its execution turned on and off, in order to allow multiple processes to be handled on a single-core. Context switching gives the ability for multi-tasking by switching rapidly through threads.

True parallelism, on the other hand, can happen on a multi-core processor, where each thread has its own core to execute on.
